-

“Liquid Forensics” Checks Safety of Drinking Water

Ping! The popular 1990 film The Hunt for Red October helped introduce submarine sonar technology to pop culture. Now, nearly thirty years later, a team of scientists is using this same sonar technology as inspiration to develop a rapid, inexpensive way to determine whether drinking water is safe to consume.

-

-

If Aliens Call, What Should We Do? Scientists Want Your Opinion.

The answer to this question could affect all of our lives more than nearly any other policy decision out there: How, if at all, should humanity respond if we get a message from an alien civilization? And yet politicians and scientists have never bothered to ask for our input on it. Sigal Samuel writes in Vox that in the age of fake news, researchers worry conspiracy theories would abound before we could figure out how, or whether, to reply to an alien message.

-

-

Bipartisan, Bicameral Legislation to Tackle Rising Threat of Deepfakes

A new bipartisan bill would require the DHS secretary to publish an annual report on the state of digital content forgery. “Deepfakes pose a serious threat to our national security, homeland security, and the integrity of our elections,” said Rep. Derek Kilmer (D-Washington), one of the bill’s sponsors.

-

-

Making the World Earthquake Safe

Can fake earthquakes help safeguard nuclear reactors against natural disasters? Visitors to this year’s Royal Society Summer Science Exhibition will be given the opportunity to find out for themselves thanks to new research.

-

-

Re-thinking Biological Arms Control for the 21st Century

International treaties prohibit the development and use of biological weapons. Yet concerns about these weapons have endured and are now escalating. Filippa Lentzos writes in a paper issued by the U.S. Marine Corps that a major source of the growing concern about future bioweapons threats stems from scientific and technical advances. Innovations in biotechnology are expanding the toolbox for modifying genes and organisms at a staggering pace, making it easier to produce increasingly dangerous pathogens. Disease-causing organisms can now be modified to increase their virulence, expand their host range, increase their transmissibility, or enhance their resistance to therapeutic interventions. Scientific advances are also making it theoretically possible to create entirely novel biological weapons, by synthesizing known or extinct pathogens or creating entirely new ones. Scientists could potentially expand the targets of bioweapons beyond the immune system to the nervous system, the genome, or the microbiome, or they could weaponize ‘gene drives’ that would rapidly and cheaply spread harmful genes through animal and plant populations.

-

-

Largest-ever simulation of the Deepwater Horizon spill

In a 600-ft.-long saltwater wave tank on the coast of New Jersey, a team of NJIT researchers is conducting the largest-ever simulation of the Deepwater Horizon spill to determine more precisely where hundreds of thousands of gallons of oil dispersed following the drilling rig’s explosion in the Gulf of Mexico in 2010.

-

-

Global cybersecurity experts gather at Israel’s Cyber Week

The magnitude of Israel’s cybersecurity industry was on full show this week at the 9th Annual Cyber Week Conference at Tel Aviv University. The largest conference on cyber tech outside of the United States, Cyber Week saw 8,000 attendees from 80 countries hear from more than 400 speakers on more than 50 panels and sessions.

-

-

Any single hair from the human body can be used for identification

Any single hair from anywhere on the human body can be used to identify a person. This conclusion is one of the key findings from a nearly year-long study by a team of researchers. The study could provide an important new avenue of evidence for law enforcement authorities in sexual assault cases.

-

-

Rectifying a wrong nuclear fuel decision

In the old days, new members of Congress knew they had much to learn. They would defer to veteran lawmakers before sponsoring legislation. But in the Twitter era, the newly elected are instant experts. That is how Washington on 12 June witnessed the remarkable phenomenon of freshman Rep. Elaine Luria (D-Norfolk) successfully spearheading an amendment that may help Islamist radicals get nuclear weapons. The issue is whether the U.S. Navy should explore modifying the reactor fuel in its nuclear-powered vessels, as France already has done, to reduce the risk of nuclear material falling into the hands of terrorists such as al-Qaida or rogue states such as Iran. Luria says no. Alan J. Kuperman writes in the Pilot Online that more seasoned legislators have started to rectify the situation by passing a spending bill on 19 June that includes funding for naval fuel research. They will have the chance to fully reverse Luria in July on the House floor by restoring the authorization. Doing so would not only promote U.S. national security but also teach an important lesson: enthusiasm is no substitute for experience.

-

-

Deepfake detection algorithms will never be enough

You may have seen news stories last week about researchers developing tools that can detect deepfakes with greater than 90 percent accuracy. It’s comforting to think that with research like this, the harm caused by AI-generated fakes will be limited. Simply run your content through a deepfake detector and bang, the misinformation is gone! James Vincent writes in The Verge that, according to experts, software that can spot AI-manipulated videos will only ever provide a partial fix to this problem. As with computer viruses or biological weapons, the threat from deepfakes is now a permanent feature on the landscape. And although it’s arguable whether deepfakes are a huge danger from a political perspective, they’re certainly damaging the lives of women here and now through the spread of fake nudes and pornography.

-

-

The history of cellular network security doesn’t bode well for 5G

There’s been quite a bit of media hype about the improvements 5G is supposedly set to bring to users, many of which are no more than telecom talking points. One aspect of the conversation that’s especially important to get right is whether 5G will bring much-needed security fixes to cell networks. Unfortunately, the security problems that have long plagued cellular networks, and more, will still be a concern in 5G.

-

-

Deepfakes: Forensic techniques to identify tampered videos

Computer scientists have developed a method that identifies deepfakes with 96 percent accuracy when evaluated on a large-scale deepfake dataset.

-

-

AI helps protect emergency personnel in hazardous environments

Whether it’s at rescue and firefighting operations or deep-sea inspections, mobile robots finding their way around unknown situations with the help of artificial intelligence (AI) can effectively support people in carrying out activities in hazardous environments.

-

-

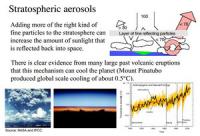

Geoengineer the planet? More scientists now say it must be an option

Once seen as spooky sci-fi, geoengineering to halt runaway climate change is now being looked at with growing urgency. A spate of dire scientific warnings that the world community can no longer delay major cuts in carbon emissions, coupled with a recent surge in atmospheric concentrations of CO2, has left a growing number of scientists saying that it’s time to give the controversial technologies a serious look. Fred Pearce writes in Yale Environment 360 that among the technologies being considered are a range of efforts to keep solar radiation from reaching the lower atmosphere, including spraying aerosols of sulphate particles into the stratosphere, and refreezing rapidly warming parts of the polar regions by deploying tall ships to pump salt particles from the ocean into polar clouds to make them brighter.

-

-

Identifying a fake picture online is harder than you might think

Research has shown that manipulated images can distort viewers’ memory and even influence their decision-making. So the harm that can be done by fake images is real and significant. Our findings suggest that to reduce the potential harm of fake images, the most effective strategy is to offer more people experiences with online media and digital image editing – including by investing in education. Then they’ll know more about how to evaluate online images and be less likely to fall for a fake.

-

More headlines

The long view

New Technology is Keeping the Skies Safe

The DHS S&T Baggage, Cargo, and People Screening (BCP) Program develops state-of-the-art screening solutions to help secure airspace, communities, and borders.

Factories First: Winning the Drone War Before It Starts

“Wars are won by factories before they are won on the battlefield,” Martin C. Feldmann writes, noting that the United States lacks the manufacturing depth for the coming drone age. Rectifying this situation “will take far more than procurement tweaks,” Feldmann writes. “It demands a national-level, wartime-scale industrial mobilization.”

How Artificial General Intelligence Could Affect the Rise and Fall of Nations

Visions for potential AGI futures: A new report from RAND aims to stimulate thinking among policymakers about possible impacts of the development of artificial general intelligence (AGI) on geopolitics and the world order.

Keeping the Lights on with Nuclear Waste: Radiochemistry Transforms Nuclear Waste into Strategic Materials

How UNLV radiochemistry is pioneering the future of energy in the Southwest by salvaging strategic materials from nuclear dumps, and making it safe.

Model Predicts Long-Term Effects of Nuclear Waste on Underground Disposal Systems

The simulations matched results from an underground lab experiment in Switzerland, suggesting modeling could be used to validate the safety of nuclear disposal sites.