Law-enforcement technology

Machine can tell when a human being is lying

In a study of forty cases, a computer correctly identifies liars more than 80 percent of the time, a better rate than humans with the naked eye typically achieve in lie-detection exercises

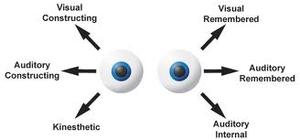

Eye position is a reliable indicator of truthfulness // Source: forumfree.it

Inspired by the work of psychologists who study the human face for clues that someone is telling a high-stakes lie, University at Buffalo computer scientists are exploring whether machines can also read the visual cues that give away deceit.

Results so far are promising: In a study of forty videotaped conversations, an automated system that analyzed eye movements correctly identified whether interview subjects were lying or telling the truth 82.5 percent of the time.

This is a better accuracy rate than expert human interrogators typically achieve in lie-detection judgment experiments, said Ifeoma Nwogu, a research assistant professor at UB’s Center for Unified Biometrics and Sensors (CUBS) who helped develop the system. In published results, even experienced interrogators average closer to 65 percent, Nwogu said.

“What we wanted to understand was whether there are signal changes emitted by people when they are lying, and can machines detect them? The answer was yes, and yes,” said Nwogu, whose full name is pronounced “e-fo-ma nwo-gu.”

A University at Buffalo release reports that the research was peer-reviewed, published and presented as part of the 2011 IEEE Conference on Automatic Face and Gesture Recognition.

Nwogu’s colleagues on the study included CUBS scientists Nisha Bhaskaran and Venu Govindaraju, and UB communication professor Mark G. Frank, a behavioral scientist whose primary area of research has been facial expressions and deception.

In the past, Frank’s attempts to automate deceit detection have used systems that analyze changes in body heat or examine a slew of involuntary facial expressions.

The automated UB system tracked a different trait — eye movement. The system employed a statistical technique to model how people moved their eyes in two distinct situations: during regular conversation, and while fielding a question designed to prompt a lie.

People whose pattern of eye movements changed between the first and second scenarios were assumed to be lying, while those who maintained consistent eye movement were assumed to be telling the truth.

In other words, when the critical question was asked, a strong deviation from normal eye movement patterns suggested a lie.
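The baseline-versus-critical-question comparison can be illustrated with a minimal Python sketch. The release does not name the statistical technique the UB team used, so this example swaps in a plain z-score as a stand-in. The feature (gaze shifts per second), the function names, the threshold of 2.0 and the sample numbers are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def deviation_score(baseline, critical):
    """Distance of the critical-segment mean from the baseline mean,
    measured in baseline standard deviations (a simple z-score).

    ASSUMPTION: a z-score stands in for the unnamed statistical model
    described in the release.
    """
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline shows no variation; cannot score deviation")
    return abs(mean(critical) - mean(baseline)) / sigma


def flag_as_deceptive(baseline, critical, threshold=2.0):
    """Flag a subject when eye movement deviates strongly from baseline.

    ASSUMPTION: the 2.0 threshold is arbitrary, not taken from the study.
    """
    return deviation_score(baseline, critical) > threshold


# Hypothetical gaze-shift counts per second, not data from the study.
baseline = [2, 3, 2, 3, 2, 3, 2, 3]   # regular conversation
consistent = [3, 2, 3, 2]             # critical question, pattern unchanged
changed = [6, 7, 6, 7]                # critical question, pattern shifted

print(flag_as_deceptive(baseline, consistent))
print(flag_as_deceptive(baseline, changed))
```

With these made-up numbers, the subject whose pattern stays the same is not flagged, while the subject whose pattern shifts sharply is. A real system would need a far richer model of eye movement than a single averaged feature.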

The release notes that previous experiments in which human judges coded facial movements found documentable differences in eye contact at times when subjects told a high-stakes lie.

What Nwogu and fellow computer scientists did was create an automated system that could verify and improve upon information used by human coders to successfully classify liars and truth tellers. The next step will be to expand the number of